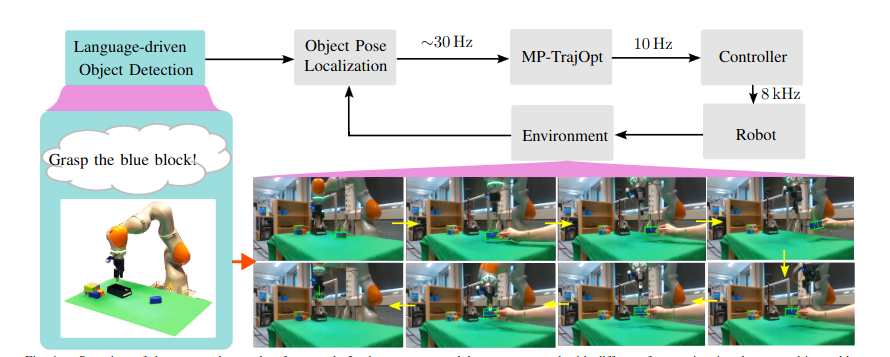

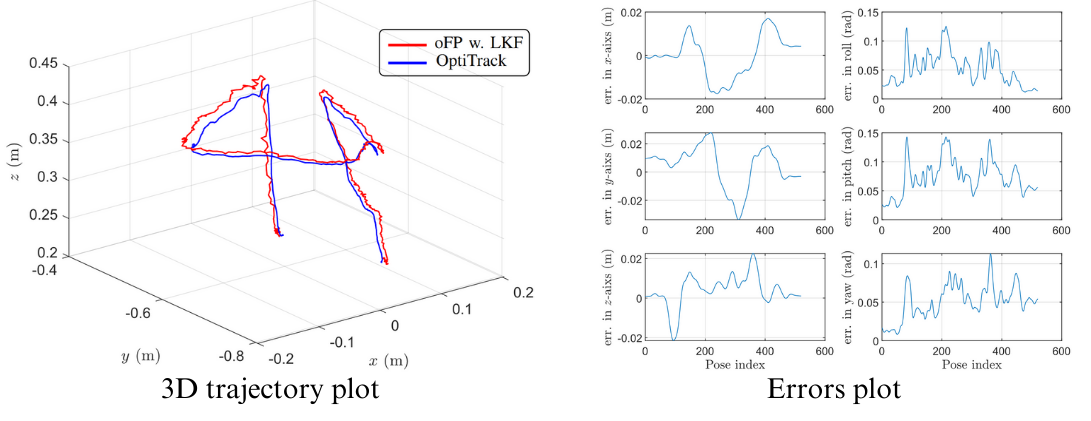

Integrating a vision module into a closed-loop control system for seamless robot motion in a manipulation task is challenging because the modules involved run at inconsistent update rates. The problem becomes harder in dynamic environments, e.g., when objects are moving. This paper presents a modular zero-shot framework for language-driven manipulation of (dynamic) objects through a closed-loop control system with real-time trajectory replanning and online 6D object pose localization. Given a natural language command, a vision language model segments the target object within 0.5 s. A closed-loop system, comprising unified pose estimation and tracking together with online trajectory planning, then continuously tracks this object and computes the optimal trajectory in real time. The result is a smooth trajectory that avoids jerky movements and ensures the robot can grasp a non-stationary object. Experimental results demonstrate the real-time capability of the proposed zero-shot modular framework to accurately and efficiently grasp moving objects, with update rates of up to 30 Hz for the online 6D pose localization module and 10 Hz for the receding-horizon trajectory optimization. These results highlight the framework's potential for applications in robotics and human-robot interaction.
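The abstract pairs a 30 Hz pose stream with a 10 Hz receding-horizon replanner. The Python sketch below illustrates one way such a multi-rate loop can be wired, under assumptions that are not taken from the paper: a hypothetical object sliding at constant velocity, a finite-difference velocity estimate from the two latest poses, a horizon that shrinks toward a fixed grasp time, and a per-axis quintic (minimum-jerk) polynomial swapped in for the authors' model-predictive trajectory optimizer. All names (`plan_quintic`, `Quintic`, `object_position`, `run`, `GRASP_TICK`) are hypothetical.

```python
"""Hypothetical multi-rate replanning loop: 30 Hz pose updates, 10 Hz replanning.

A quintic (minimum-jerk) polynomial stands in for the paper's receding-horizon
trajectory optimizer; the object model, rates, and names are illustrative only.
"""
from dataclasses import dataclass

POSE_HZ = 30        # pose-tracking rate quoted in the abstract
REPLAN_EVERY = 3    # replan every 3 pose ticks -> 10 Hz
GRASP_TICK = 60     # hypothetical grasp time: 60 ticks = 2.0 s


@dataclass
class Quintic:
    """1D polynomial p(t) = sum_i c[i] * t**i, valid on [0, T]."""
    c: tuple

    def eval(self, t):
        c0, c1, c2, c3, c4, c5 = self.c
        p = c0 + c1*t + c2*t**2 + c3*t**3 + c4*t**4 + c5*t**5
        v = c1 + 2*c2*t + 3*c3*t**2 + 4*c4*t**3 + 5*c5*t**4
        a = 2*c2 + 6*c3*t + 12*c4*t**2 + 20*c5*t**3
        return p, v, a


def plan_quintic(p0, v0, a0, pf, vf, af, T):
    """Quintic matching position, velocity and acceleration at t=0 and t=T."""
    d = pf - p0
    c3 = (20*d - (8*vf + 12*v0)*T - (3*a0 - af)*T**2) / (2*T**3)
    c4 = (-30*d + (14*vf + 16*v0)*T + (3*a0 - 2*af)*T**2) / (2*T**4)
    c5 = (12*d - 6*(vf + v0)*T - (a0 - af)*T**2) / (2*T**5)
    return Quintic((p0, v0, a0 / 2, c3, c4, c5))


def object_position(t):
    """Hypothetical object moving at constant velocity (x, y, z in metres)."""
    return [0.5 + 0.10*t, -0.2 + 0.05*t, 0.1]


def run():
    dt = 1.0 / POSE_HZ
    robot = [(0.0, 0.0, 0.0)] * 3     # (pos, vel, acc) per axis, starting at rest
    plans, plan_tick = None, 0
    prev_pose, pose = None, None
    for k in range(GRASP_TICK + 1):
        # 30 Hz: the pose tracker delivers a new estimate every tick.
        prev_pose, pose = pose, object_position(k * dt)
        # Robot state sampled from the plan currently being executed.
        if plans is not None:
            robot = [q.eval((k - plan_tick) * dt) for q in plans]
        if k == GRASP_TICK:
            break  # grasp time reached; no further replanning
        # 10 Hz: replan from the current robot state to the predicted grasp point.
        if k % REPLAN_EVERY == 0:
            if prev_pose is not None:
                vel = [(b - a) / dt for a, b in zip(prev_pose, pose)]
            else:
                vel = [0.0] * 3       # no velocity estimate from a single pose
            T = (GRASP_TICK - k) * dt  # shrinking horizon up to the grasp time
            plans = [plan_quintic(p, v, a, x + vx*T, vx, 0.0, T)
                     for (p, v, a), x, vx in zip(robot, pose, vel)]
            plan_tick = k
    return [s[0] for s in robot], pose


robot_xyz, object_xyz = run()
print(robot_xyz, object_xyz)
```

Each replan starts from the position, velocity, and acceleration of the polynomial being executed, so the commanded motion stays continuous across the 10 Hz switches; the predicted target is refreshed from the most recent 30 Hz pose, which is how the slower planner absorbs the faster tracker's updates in this sketch.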

Prompt: "Grasp the metal part"

Prompt: "Grasp the orange block"

Prompt: "Grasp the plier"

Prompt: "Grasp the scissor"

Prompt: "Grasp the line stripper"

Prompt: "Grasp the timer"

Prompt: "Grasp the eraser"

Prompt: "Grasp the black tool"

Prompt: "Grasp the drill"

Prompt: "Grasp the silver block"

@article{NGUYEN2025103335,

title = {Language-driven closed-loop grasping with model-predictive trajectory optimization},

journal = {Mechatronics},

volume = {109},

pages = {103335},

year = {2025},

issn = {0957-4158},

doi = {10.1016/j.mechatronics.2025.103335},

url = {https://www.sciencedirect.com/science/article/pii/S0957415825000443},

author = {H.H. Nguyen and M.N. Vu and F. Beck and G. Ebmer and A. Nguyen and W. Kemmetmueller and A. Kugi},

keywords = {Language-driven object detection, Pose estimation, Grasping, Trajectory optimization},

}

We borrow the page template from the Nerfies project page. Special thanks to them!

This website is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.